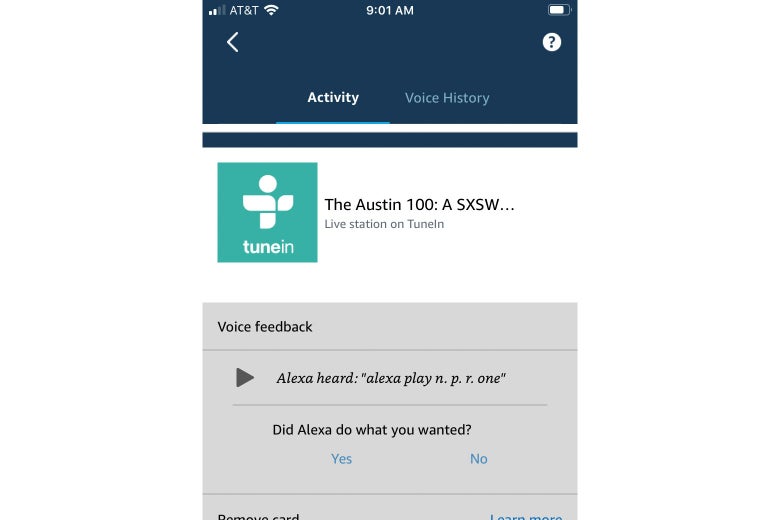

While I was making dinner, I yelled at Alexa. I’m not a yeller, at least not to humans or animals. But the recipe was a little complicated, and I kept having to repeat myself to get the damn Amazon Echo to turn off the timer. And when I used my computer communication voice to ask it to play NPR One so I could catch up on the news—it had been a whole eight or nine minutes since I had checked in with the world—it tried three times to instead play “The Austin 100: A SXSW Mix From NPR Music.”

I feel a little bad about it, remembering Rachel Withers’ (very persuasive!) 2018 piece for Future Tense about why she won’t date men who are rude to Alexa:

By default, the Alexa app records all of your interactions—that is, whenever it thinks it has heard the wake word. Much like Nixon’s old tapes, they’re there for your own subsequent listening pleasure, and can be used against you in a court of law. (Not many users realize you can set the recordings for autodelete or ask it to delete what you just said.)

Sometimes I scroll through my recent history with Alexa and listen back to myself snapping at the robot. The final “Alexa, off!” is always more aggressive than the first, and as I hear it, Rachel’s wise words echo (sorry) in my mind.

For years now, commentators have reminded us that the gendered dynamics of digital assistants are troubling. In September, Future Tense ran an excerpt from The Smart Wife: Why Siri, Alexa, and Other Smart Home Devices Need a Feminist Reboot by Yolande Strengers and Jenny Kennedy. “Friendly and helpful feminized devices often take a great deal of abuse when their owners swear or yell at them. They are also commonly glitchy, leading to their characterization as ditzy feminized devices by owners and technology commentators—traits that reinforce outdated (and unfounded) gendered stereotypes about essentialized female inferior intellectual ability,” they write. That’s me, swearing and yelling at my feminized device even though it only wants to be friendly and helpful.

What I tell myself, though, is that I’m really trying to avoid anthropomorphizing the Echo and the rest of the tech in my life. It’s a tendency I’ve had ever since I got to know ELIZA, the chatbot created by an MIT researcher in the 1960s. ELIZA was designed to mimic Rogerian therapy—which basically means that this simple program turns everything you say into a question. For some reason, it was installed on some of the computers in my middle-school library in the ’90s. Most of the time, I tried to get her—I mean it!—to swear, but I also spilled my tweenage heart out occasionally. And I’m not the only one. As a Radiolab episode from 2013 detailed: “At first, ELIZA’s creator Joseph Weizenbaum thought the idea of a computer therapist was funny. But when his students and secretary started talking to it for hours, what had seemed to him to be an amusing idea suddenly felt like an appalling reality.”

I tried to talk to ELIZA about my relationship woes recently.

What I realize in the end—with no thanks to ELIZA—is that I can yell at Alexa and no one’s feelings will be hurt. I don’t really want to have strong emotional bonds to a robot that could manipulate me into making a purchase as its A.I. learns to detect moods and to speak more naturally. So for now, I’ll keep speaking to Alexa the way I’d never speak to a human. And maybe kids should, too. They need to learn computers are just dumb machines anyway.

Meanwhile on Future Tense, former Gov. Jeb Bush called for a nationwide investment—$100 million to start with—to bring broadband internet to the millions of Americans who remain disconnected.

Here are some other highlights from the recent past of Future Tense:

Wish We’d Published This

“Inside the Strange New World of Being a Deepfake Actor,” Karen Hao, Technology Review

Future Tense Recommends

Don’t let this year’s existential terror keep you from some recreational Halloween scares. In Ruth Ware’s thriller The Turn of the Key, a nanny becomes terrified of the remote smarthouse where she is alone with several children.

What Next: TBD

This week on Slate’s technology podcast, guest host Celeste Headlee spoke with the Markup’s Lauren Kirchner about the deeply flawed automated background checks used by landlords—and how they can keep people out of homes. Last week, Celeste interviewed Eduardo Gamarra, professor of politics and international relations at Florida International University, about the Spanish-language misinformation that has flooded Florida ahead of the presidential election.

Upcoming Future Tense Events

• Wednesday, Oct. 14, noon Eastern: Stories of Algorithmic Justice with Future Tense Fiction authors Yudhanjaya Wijeratne (“The State Machine”), Tochi Onyebuchi (“How to Pay Reparations: A Documentary”), and Holli Mintzer (“Legal Salvage”) and moderator Edward Finn, director of Arizona State University’s Center for Science and the Imagination

• Wednesday, Oct. 21, noon: Will We Ever Vote on Our Phones? with Lawrence Norden, director of the Brennan Center for Justice’s Election Reform Program; Kevin Collier, reporter with NBC; Nimit Sawhney, co-founder and CEO of Voatz; and moderator Jane C. Hu, Future Tense contributor

• Wednesday, Oct. 28, 11:30 a.m.: Free Speech Project: Do We Need a First Amendment 2.0? with Anne-Marie Slaughter, CEO of New America; Geoffrey R. Stone, Edward H. Levi Distinguished Service professor of law, University of Chicago; Neil Richards, Koch distinguished professor in law, Washington University School of Law; and Jennifer Daskal, professor and faculty director of the Tech, Law, & Security Program at American University Washington College of Law
